An Explosion of Genetic Data Brings Errors That Grow

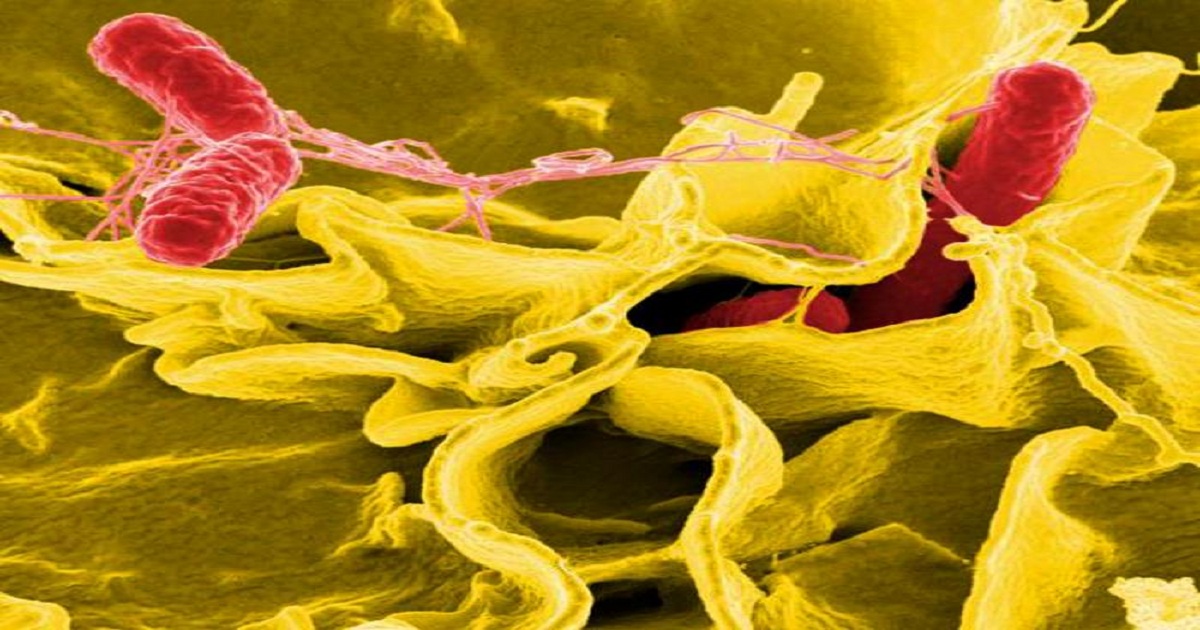

A team of researchers at Washington State University (WSU) wanted to identify the minimum set of proteins that gram-negative bacteria called Proteobacteria require to survive. The team compiled a dataset of 2,300 bacterial genomes containing sequences for nearly nine million proteins, and grouped similar sequences together. As they began to assess those sequences, they started finding errors in publicly available genomic data that scientists, themselves included, rely on. Their study, which could have major implications for future research, has been reported in Frontiers in Microbiology.

"Just in the last two years, researchers have sequenced more than twice the number of bacterial genomes as they did in the twenty years before that," said Shira Broschat, a professor in the School of Electrical Engineering and Computer Science at WSU.

A method for genetic sequencing was pioneered in 1977 by Frederick Sanger and colleagues, then commercialized and brought into research labs, where it had a huge impact. Sequencing the human genome that way took over ten years and $2.7 billion. Recent years have seen the advent of a new tool, next-generation sequencing (described in the video), which can deliver a human genome sequence in under an hour for $1,500. Unsurprisingly, this new technology quickly generated a staggering amount of new genetic data.